One reason for the recent advances in machine learning is the availability of large amounts of annotated data. In computer vision, the creation of ImageNet was an important milestone. ImageNet is a collection of millions of annotated images. Each image carries a short term describing what it shows, e.g., a house or a child. In a long essay, Kate Crawford and Trevor Paglen describe the problematic creation of ImageNet. They also point out some strange descriptions of humans in ImageNet, e.g., “drug addict”, “loser” or “slut”. In this article, I reconstruct how the famous UK TV cook Nigella Lawson ended up labeled as “slut” in ImageNet.

Before we get to ImageNet, we have to talk about WordNet. The things (concepts) that appear in ImageNet are derived from WordNet.

WordNet: a database of words

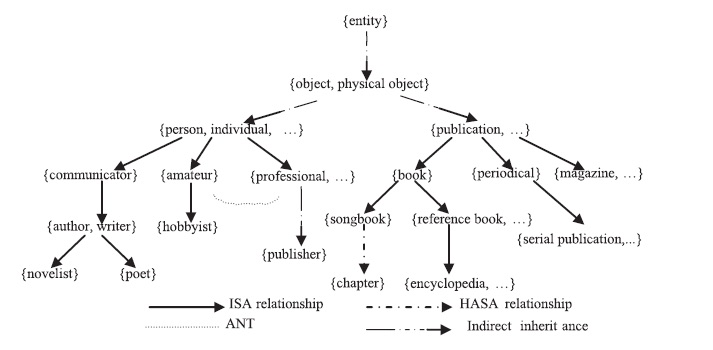

WordNet is a database of words (we focus only on English). WordNet groups synonyms into so-called synsets (sets of synonyms). For example, “writer” and “author” belong to the same synset. Synsets also have hierarchical relationships: the synset “book” and the synset “magazine” are special forms of the synset “publication”.

Hierarchical relationship in WordNet: Source
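To make the idea of synsets and their hierarchy more concrete, here is a minimal sketch in Python. It does not use the real WordNet data or any WordNet library. The `Synset` class, its fields, and the short definitions are assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare synsets by identity, not by content
class Synset:
    """A toy synset: synonymous words, a definition, and more general parents."""
    words: list[str]
    definition: str  # illustrative text, not WordNet's actual wording
    parents: list["Synset"] = field(default_factory=list)

    def ancestors(self) -> list["Synset"]:
        """Return all more general synsets, nearest first."""
        result = []
        queue = list(self.parents)
        while queue:
            current = queue.pop(0)
            if current not in result:
                result.append(current)
                queue.extend(current.parents)
        return result


# Hypothetical mini hierarchy based on the examples in the text.
publication = Synset(["publication"], "a printed work")
book = Synset(["book"], "a bound printed work", parents=[publication])
magazine = Synset(["magazine"], "a periodical printed work", parents=[publication])
writer = Synset(["writer", "author"], "a person who writes")

book_ancestors = [s.words[0] for s in book.ancestors()]
print(book_ancestors)
print("author" in writer.words)
```

The two words “writer” and “author” live in one `Synset` object, while “book” and “magazine” are separate synsets that both point to “publication” as their parent.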

The creators of ImageNet chose WordNet because of its hierarchical structure. However, WordNet does not represent reality, as a WordNet creator writes on a presentation slide:

Modifications, additions were done piecemeal, often determined by a particular funder e.g. Navy grant motivated entries like head: (nautical) a toilet on a boat or ship

So there are a lot of navy terms in WordNet because the US Navy funded the research. In the retrospective presentation, the WordNet contributor expresses the wish to “proceed systematically” when modifying WordNet. It’s fair to say that WordNet, even though it’s widely used, was not created systematically. It was not intended to be used as a reference for the English language. The researchers were mostly psychologists who studied how humans perceive words and concepts.

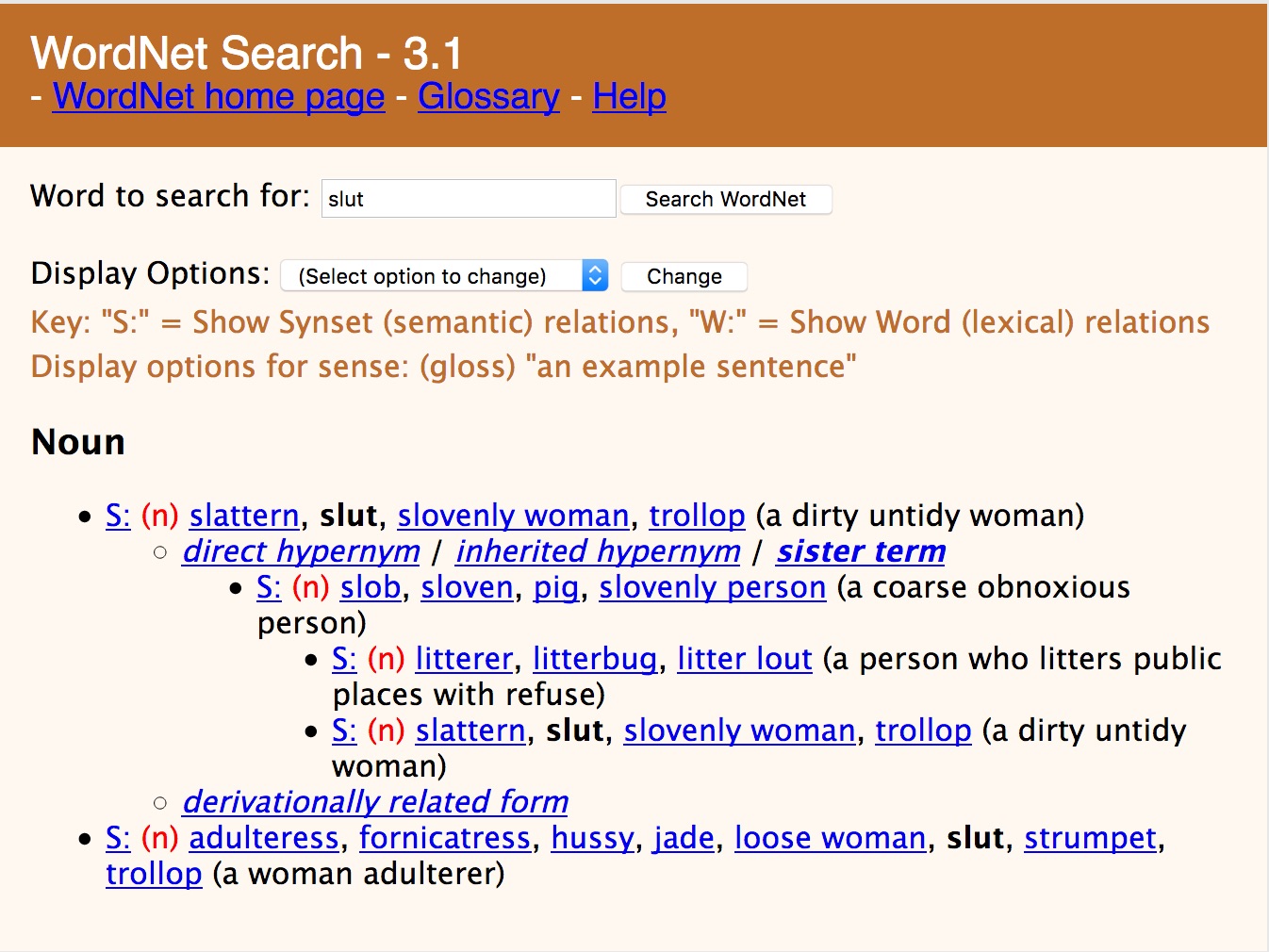

The synset for “slut” lists two meanings of the term. The first is an old meaning of the word: slattern, slut […] is “a dirty untidy woman”. According to a modern dictionary, this meaning is dated. Nevertheless, the creators of ImageNet chose WordNet as a starting point.

“Slut” in WordNet: Source

How the images for ImageNet were collected

The images for ImageNet were selected in three steps:

1. Select 20,000 synsets from WordNet

WordNet consists of 40,000 synsets, but only 20,000 were used for ImageNet. I didn’t find any explanation of how exactly the ImageNet creators chose their synsets. Maybe they tried out the methods described below and they only worked in half of the cases. In any case, “slut” ended up among the chosen 20,000 synsets.

2. Scrape images from the Web

Then they had to find images on the Web for the chosen 20,000 synsets. They used automation and search engines such as Google Images for this. They entered the terms from each synset (one at a time) and treated all resulting images as candidate images. So for this synset, they entered “slut” or “slattern” and treated the results as candidates for “slut”. But search engines used to limit their output to 100 results per query. To circumvent this, they appended terms from “parent” synsets to the query. In our case, they searched for “slut woman” and “slattern woman”, since “slut” and “slattern” are both children of “woman”.
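The query expansion described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the ImageNet creators' actual code. The function name `build_queries` and its parameters are hypothetical, and no search engine is contacted.

```python
def build_queries(synset_words, parent_words):
    """Build search queries for one synset.

    First each word of the synset on its own, then each word
    combined with each word from a parent synset.
    """
    queries = list(synset_words)
    for word in synset_words:
        for parent in parent_words:
            queries.append(f"{word} {parent}")
    return queries


# The example from the text: the synset words plus the parent term "woman".
queries = build_queries(["slut", "slattern"], ["woman"])
for query in queries:
    print(query)
```

Every image returned for any of these queries would count as a candidate for the same synset, no matter which of the words actually produced the hit.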

3. Refine dataset via crowdsourcing

How workers on Amazon Mechanical Turk verified the images: Source

In the last step, humans checked whether the images actually matched their query term. The ImageNet creators were aware that search results often do not match, so they needed human verification. They used 48,940 workers from 167 countries via Amazon Mechanical Turk to filter the candidate images. The workers were shown candidate images together with the definition of the synset. Then they had to select the images that matched the definition.
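As a rough sketch of how such a verification step might turn worker answers into a final dataset, the following Python snippet keeps an image only if enough workers selected it. The article does not say how the answers were combined, so the simple agreement threshold, the function name `filter_candidates`, and the image IDs are all assumptions for illustration.

```python
def filter_candidates(votes, min_agreement=0.5):
    """Keep images that more than `min_agreement` of the workers selected.

    votes maps a (hypothetical) image ID to a list of booleans,
    one per worker: True means "matches the synset definition".
    """
    accepted = []
    for image_id, answers in votes.items():
        if not answers:
            continue  # nobody looked at this image, so skip it
        share = sum(answers) / len(answers)
        if share > min_agreement:
            accepted.append(image_id)
    return accepted


# Made-up votes for three candidate images.
votes = {
    "img_a": [True, True, False],
    "img_b": [False, False, True],
    "img_c": [],
}
accepted = filter_candidates(votes)
print(accepted)
```

Whatever rule is used, the result depends on the workers judging against the given definition. If they judge against their own understanding of the term instead, a wrong label can pass the filter.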

How Nigella Lawson was labeled as “slut”

And here is a likely explanation of how it all happened.

Nigella Lawson was described as a “slattern” in an online news article, and “woman” appeared in the article’s headline. Very likely, her image was a Google Images result for the query “slattern woman”. Since “slattern” is also in the synset “slut”, the image became a candidate for “slut”.

Then, during candidate selection, some crowd workers confirmed that she was a “slut” / “slattern”. Very likely, they didn’t follow the WordNet definition of “slut”.

A screenshot of a Telegraph article describing Nigella Lawson: Source

There are 187 other images in the category “slut”. I tracked down the origin of four of those images; some of them came from Flickr. None of the people shown in the images gave their consent to be in the image database.

ImageNet is not the only image database. Adam Harvey et al. have reconstructed the origins of various face databases at exposing.ai. They even set up a search form to check whether your Flickr images are unwillingly part of a face database: exposing.ai/search.